Embracing Change in a Fast-Moving Startup

At least once a year I go sailing with my family. Nothing too fancy: a sailboat that we can operate ourselves, where we enjoy nature and quality family time. Surprisingly, a key element of those trips is the wind, its direction and its force, and the thing about the wind is that it keeps changing!

Like the changing wind, life constantly changes. This is especially true in a fast-moving startup. What works well today may not be the right fit tomorrow. The ability to recognize when it’s time to pivot, optimize, or even completely overhaul a system is critical. As engineers, we have a tendency to fall in love with our decisions and the systems we build. But clinging to past choices can be a liability.

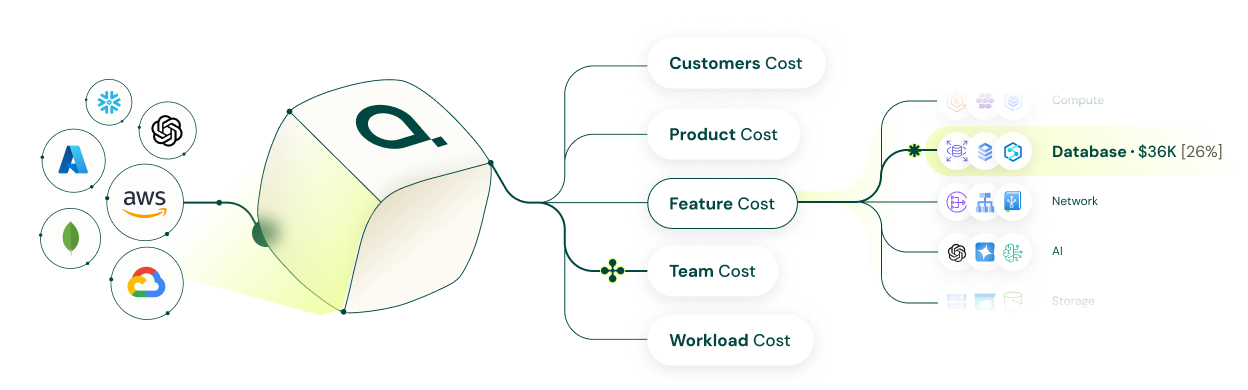

At Attribute, we fall in love with people, not with what we build, so it came as no surprise when we had to revisit our data processing stack. Early on, we made the best infrastructure choices given our constraints. But as we scaled, those same choices became limitations, forcing us to rethink and adapt.

The Early Decision: Serverless Dataproc for Spark

When we started building our data processing infrastructure, we chose Serverless Dataproc to run our Spark jobs. At the time, this decision made perfect sense. As a lean team with limited engineering bandwidth, we didn’t have the luxury to build and maintain a self-managed Spark cluster. Serverless Dataproc allowed us to focus on delivering value without worrying about operational overhead. We could write our Spark jobs, deploy them easily, and let Google Cloud handle the rest.

The Growing Pains: Cost and Lack of Control

As our business grew, so did our data processing needs. More data meant more jobs, higher execution frequency, and eventually, skyrocketing costs.

We also started hitting operational bottlenecks. Dataproc’s black-box nature meant we had little visibility into job execution details, making it difficult to optimize performance and debug failures efficiently.

At this point, the disadvantages outweighed the benefits. It was clear that we needed to take back control.

The “Why” Behind the Migration

I have a simple rule when approaching any engineering project: it must start with a strong “why”. If there’s no compelling reason to do something, we shouldn’t waste our most valuable resource, time, on it. (Hint: trying out new technology just because it’s “cool” is never a good enough reason.)

For our Dataproc migration, the “why” was clear:

- Cost efficiency: Serverless Dataproc had become too expensive.

- Operational transparency: We needed better control and visibility into our Spark jobs.

- Scalability and flexibility: We wanted the ability to fine-tune our infrastructure for our evolving needs.

With these reasons driving us, we embarked on a focused project to migrate our Spark workloads from Dataproc to a self-managed Kubernetes-based solution.

The Migration: Moving Spark to Kubernetes

We decided to deploy our Spark jobs using Spark-on-Kubernetes, which allows running Spark applications as native Kubernetes workloads. Here’s a high-level breakdown of our approach:

- Infrastructure Setup:

  - We provisioned a Kubernetes cluster on Google Kubernetes Engine (GKE) with autoscaling enabled to handle varying workloads.

  - We configured a dedicated namespace for our Spark jobs, ensuring clear separation from other services.

  - We used Spot instances to cut costs further.

- Job Execution with SparkApplication CRD:

  - We leveraged the Spark Operator to manage Spark workloads as Kubernetes-native resources.

  - This allowed us to define Spark jobs declaratively using Kubernetes Custom Resources (CRs), making them easier to manage and scale.

- Observability and Monitoring:

  - We integrated VictoriaMetrics and Grafana for real-time monitoring of job execution.
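
To make the declarative approach above more concrete, here is a minimal sketch of how a job might be described as a `SparkApplication` custom resource. It is not our production configuration: the job name, namespace, image, file path, service account, Spark version, and resource sizes are all hypothetical placeholders. It assumes the Spark Operator's `sparkoperator.k8s.io/v1beta2` API and the `cloud.google.com/gke-spot` node label that GKE applies to Spot VM nodes.

```python
import json


def build_spark_application(name, namespace, image, main_file,
                            executors=2, use_spot=True):
    """Return a SparkApplication manifest (as a dict) for the Spark Operator.

    All concrete values passed in or hard-coded here are illustrative
    assumptions, not settings taken from a real deployment.
    """
    executor = {"cores": 1, "instances": executors, "memory": "2g"}
    if use_spot:
        # GKE labels Spot VM nodes with cloud.google.com/gke-spot=true.
        executor["nodeSelector"] = {"cloud.google.com/gke-spot": "true"}
        # Only needed if the Spot node pool is tainted; harmless otherwise.
        executor["tolerations"] = [{
            "key": "cloud.google.com/gke-spot",
            "operator": "Equal",
            "value": "true",
            "effect": "NoSchedule",
        }]

    return {
        "apiVersion": "sparkoperator.k8s.io/v1beta2",
        "kind": "SparkApplication",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "Python",
            "mode": "cluster",
            "image": image,
            "mainApplicationFile": main_file,
            "sparkVersion": "3.5.0",          # hypothetical version
            "restartPolicy": {"type": "Never"},
            # The driver gets no Spot selector in this sketch: a preempted
            # executor can be replaced, a preempted driver fails the job.
            "driver": {
                "cores": 1,
                "memory": "1g",
                "serviceAccount": "spark",    # hypothetical service account
            },
            "executor": executor,
        },
    }


if __name__ == "__main__":
    manifest = build_spark_application(
        name="daily-aggregation",                       # hypothetical job
        namespace="spark-jobs",                         # hypothetical namespace
        image="example.com/spark-jobs:latest",          # hypothetical image
        main_file="local:///opt/spark/app/job.py",      # hypothetical path
    )
    # kubectl accepts JSON as well as YAML, so the output can be piped
    # into `kubectl apply -f -`.
    print(json.dumps(manifest, indent=2))
```

Generating the manifest from code is only one option; the same resource can be written by hand as YAML. Either way, the job definition lives in version control next to the job itself, which is much of what makes the declarative model easier to manage.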

The Outcome: More Control, Lower Costs, and Better Monitoring

The migration resulted in significant improvements:

- Cost Reduction: By eliminating Dataproc’s managed overhead and optimizing resource usage, we cut our Spark processing costs by more than 70%.

- Increased Transparency: We gained deeper visibility into job execution, leading to better debugging and optimization. It also gave the engineering team a stronger sense of ownership: with the black box gone, we are forced to do better engineering work.

- Scalability: Running on Kubernetes allows us to scale workloads dynamically without vendor-imposed limitations. Improving Spark performance and shortening run times also made our feature development more efficient, driving more product value.

Final Thoughts

Engineering is about solving problems in the most efficient way possible. Our decision to use Serverless Dataproc was right for us at the beginning, and our decision to move to Kubernetes was right for us as we scaled.

The key lesson? Stay adaptable. Don’t fall in love with your decisions; embrace change, because it will come whether you like it or not.

I wonder when we will start feeling the friction with our current Spark application infrastructure 😉